All Of The Above Questions

Writing Good Multiple Choice Test Questions

Cite this guide: Brame, C. (2013) Writing good multiple choice test questions. Retrieved [todaysdate] from https://cft.vanderbilt.edu/guides-sub-pages/writing-good-multiple-choice-test-questions/.

- Constructing an Effective Stem

- Constructing Effective Alternatives

- Additional Guidelines for Multiple Choice Questions

- Considerations for Writing Multiple Choice Items that Test Higher-order Thinking

- Additional Resources

Multiple choice test questions, also known as items, can be an effective and efficient way to assess learning outcomes. Multiple choice test items have several potential advantages:

Versatility: Multiple choice test items can be written to assess various levels of learning outcomes, from basic recall to application, analysis, and evaluation. Because students are choosing from a set of potential answers, however, there are obvious limits on what can be tested with multiple choice items. For example, they are not an effective way to test students' ability to organize thoughts or articulate explanations or creative ideas.

Reliability: Reliability is defined as the degree to which a test consistently measures a learning outcome. Multiple choice test items are less susceptible to guessing than true/false questions, making them a more reliable means of assessment. The reliability is enhanced when the number of multiple choice items focused on a single learning objective is increased. In addition, the objective scoring associated with multiple choice test items frees them from problems with scorer inconsistency that can plague scoring of essay questions.

Validity: Validity is the degree to which a test measures the learning outcomes it purports to measure. Because students can typically answer a multiple choice item much more quickly than an essay question, tests based on multiple choice items can typically focus on a relatively broad representation of course material, thus increasing the validity of the assessment.
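The comparison with true/false questions made under Reliability above can be checked with a little arithmetic. The sketch below is illustrative only and is not part of the original guide: it assumes a test-taker who guesses blindly and independently on every item, and the function name `prob_pass_by_guessing`, the 10-item test length, and the pass mark of 6 are all hypothetical choices made for the example.

```python
from math import comb


def prob_pass_by_guessing(n_items: int, n_options: int, pass_mark: int) -> float:
    """Probability of getting at least pass_mark items right by blind guessing.

    Assumes every item has n_options equally likely alternatives and that
    guesses are independent (a simplifying assumption for illustration).
    """
    p = 1 / n_options  # chance of guessing a single item correctly
    return sum(
        comb(n_items, k) * p**k * (1 - p) ** (n_items - k)
        for k in range(pass_mark, n_items + 1)
    )


if __name__ == "__main__":
    # Hypothetical 10-item test with a pass mark of 6 correct answers.
    true_false = prob_pass_by_guessing(10, 2, 6)
    four_option = prob_pass_by_guessing(10, 4, 6)
    print(f"True/false:     {true_false:.3f}")
    print(f"Four-option MC: {four_option:.3f}")
```

Under these assumptions, a blind guesser reaches the pass mark about 38% of the time on true/false items but only about 2% of the time on four-option items. Real test-takers rarely guess blindly, so these figures show the direction of the effect and do not predict actual scores.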

The key to taking advantage of these strengths, however, is construction of good multiple choice items.

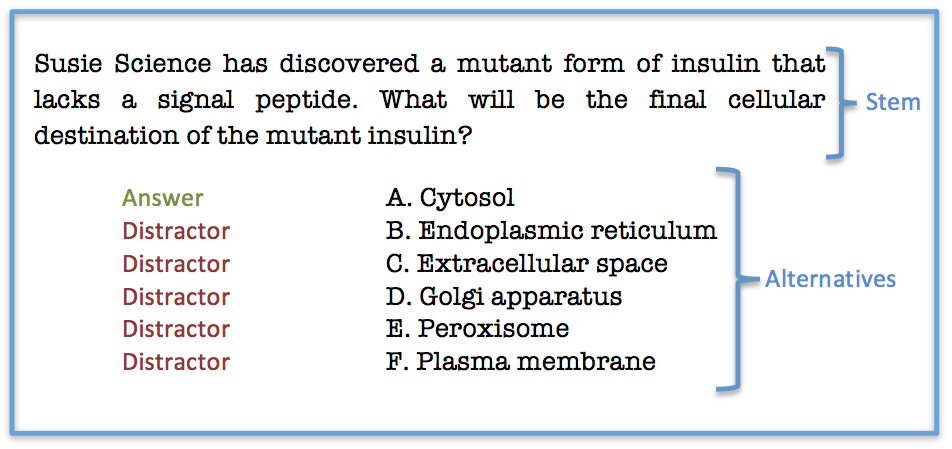

A multiple choice item consists of a problem, known as the stem, and a list of suggested solutions, known as alternatives. The alternatives consist of one correct or best alternative, which is the answer, and incorrect or inferior alternatives, known as distractors.

Constructing an Effective Stem

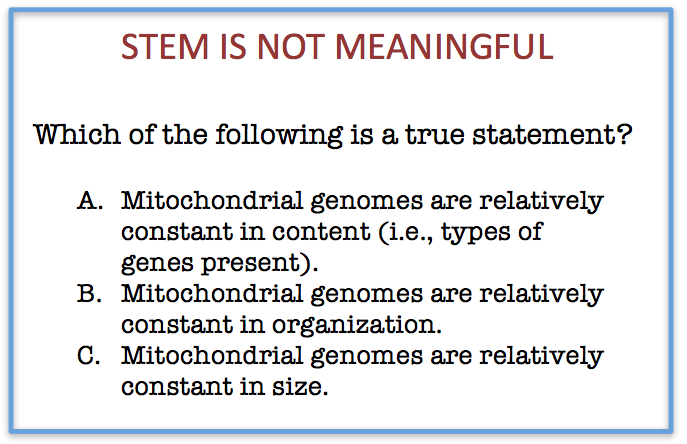

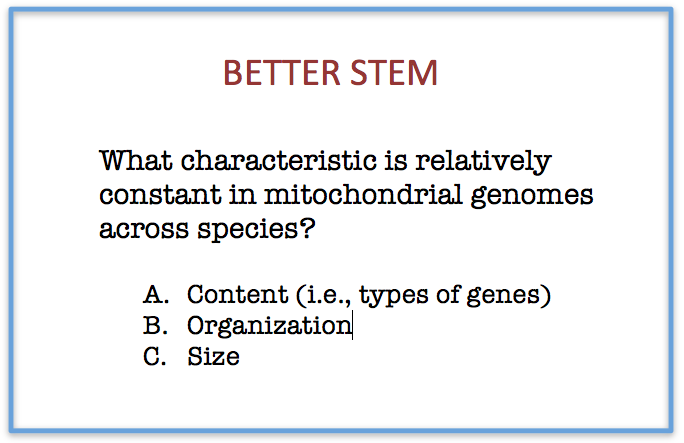

1. The stem should be meaningful by itself and should present a definite problem. A stem that presents a definite problem allows a focus on the learning outcome. A stem that does not present a clear problem, however, may test students' ability to draw inferences from vague descriptions rather than serving as a more direct test of students' achievement of the learning outcome.

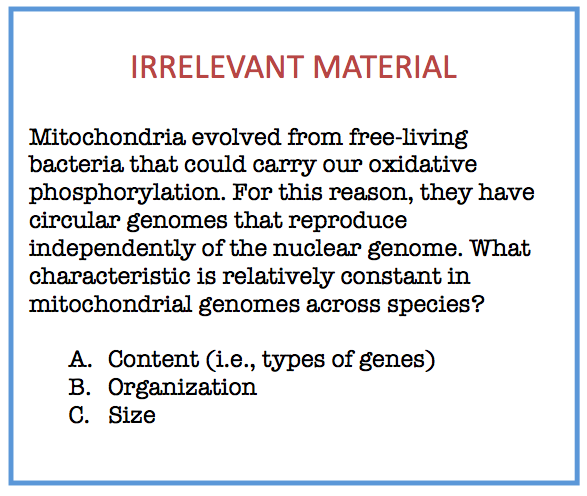

2. The stem should not contain irrelevant material, which can decrease the reliability and the validity of the test scores (Haladyna and Downing 1989).

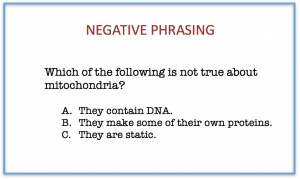

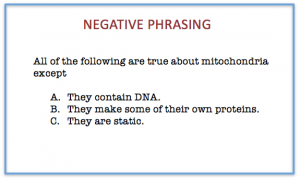

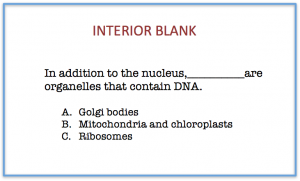

3. The stem should be negatively stated only when significant learning outcomes require it. Students often have difficulty understanding items with negative phrasing (Rodriguez 1997). If a significant learning outcome requires negative phrasing, such as identification of dangerous laboratory or clinical practices, the negative element should be emphasized with italics or capitalization.

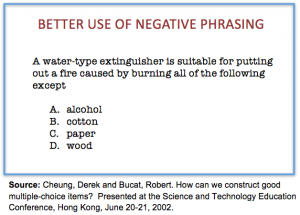

4. The stem should be a question or a partial sentence. A question stem is preferable because it allows the student to focus on answering the question rather than holding the partial sentence in working memory and sequentially completing it with each alternative (Statman 1988). The cognitive load is increased when the stem is constructed with an initial or interior blank, so this construction should be avoided.

Constructing Effective Alternatives

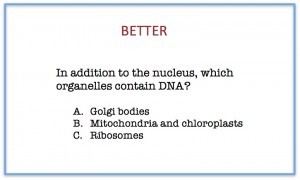

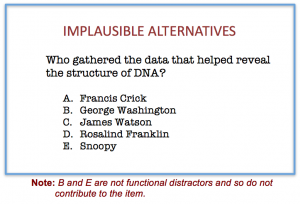

1. All alternatives should be plausible. The function of the incorrect alternatives is to serve as distractors, which should be selected by students who did not achieve the learning outcome but ignored by students who did achieve the learning outcome. Alternatives that are implausible don't serve as functional distractors and thus should not be used. Common student errors provide the best source of distractors.

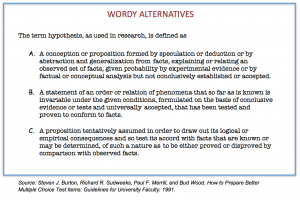

2. Alternatives should be stated clearly and concisely. Items that are excessively wordy assess students' reading ability rather than their attainment of the learning objective.

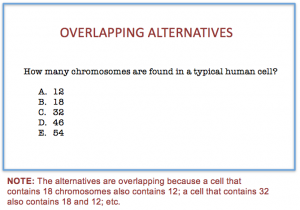

3. Alternatives should be mutually exclusive. Alternatives with overlapping content may be considered "trick" items by test-takers, excessive use of which can erode trust and respect for the testing process.

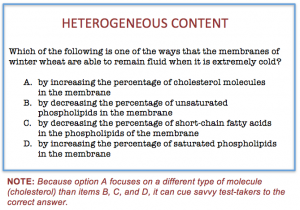

4. Alternatives should be homogeneous in content. Alternatives that are heterogeneous in content can provide cues to students about the correct answer.

5. Alternatives should be free from clues about which response is correct. Sophisticated test-takers are alert to inadvertent clues to the correct answer, such as differences in grammar, length, formatting, and language choice in the alternatives. It's therefore important that alternatives

- have grammar consistent with the stem.

- are parallel in form.

- are similar in length.

- use similar language (e.g., all unlike textbook language or all like textbook language).

6. The alternatives "all of the above" and "none of the above" should not be used. When "all of the above" is used as an answer, test-takers who can identify more than one alternative as correct can select the correct answer even if unsure about other alternative(s). When "none of the above" is used as an alternative, test-takers who can eliminate a single option can thereby eliminate a second option. In either case, students can use partial knowledge to arrive at a correct answer.

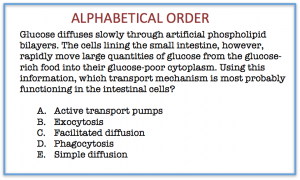

7. The alternatives should be presented in a logical order (e.g., alphabetical or numerical) to avoid a bias toward certain positions.

8. The number of alternatives can vary among items as long as all alternatives are plausible. Plausible alternatives serve as functional distractors, which are those chosen by students who have not achieved the objective but ignored by students who have achieved the objective. There is little difference in difficulty, discrimination, and test score reliability among items containing two, three, and four distractors.

Additional Guidelines

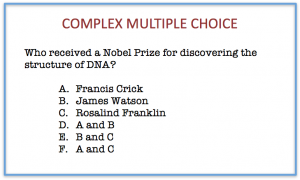

1. Avoid complex multiple choice items, in which some or all of the alternatives consist of different combinations of options. As with "all of the above" answers, a sophisticated test-taker can use partial knowledge to achieve a correct answer.

2. Keep the specific content of items independent of one another. Savvy test-takers can use information in one question to answer another question, reducing the validity of the test.

Considerations for Writing Multiple Choice Items that Test Higher-order Thinking

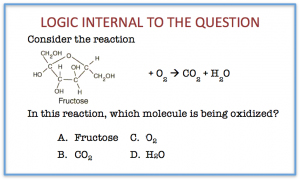

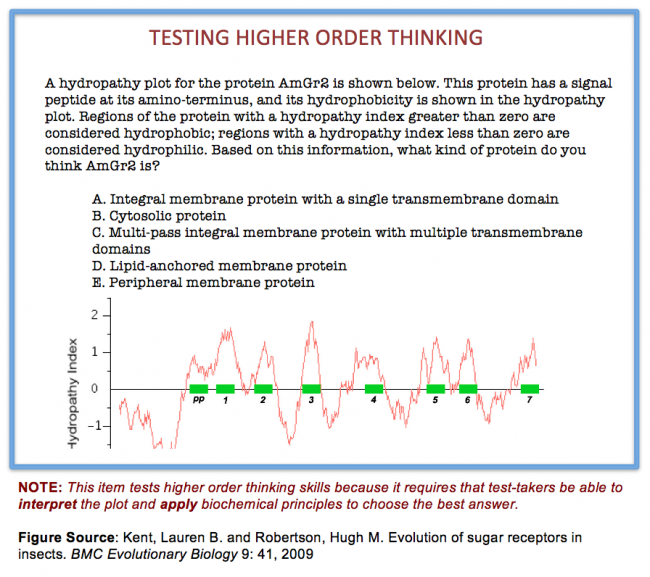

When writing multiple choice items to test higher-order thinking, design questions that focus on higher levels of cognition as defined by Bloom's taxonomy. A stem that presents a problem that requires application of course principles, analysis of a problem, or evaluation of alternatives is focused on higher-order thinking and thus tests students' ability to do such thinking. In constructing multiple choice items to test higher-order thinking, it can also be helpful to design problems that require multilogical thinking, where multilogical thinking is defined as "thinking that requires knowledge of more than one fact to logically and systematically apply concepts to a …problem" (Morrison and Free, 2001, page 20). Finally, designing alternatives that require a high level of discrimination can also contribute to multiple choice items that test higher-order thinking.

Additional Resources

- Burton, Steven J., Sudweeks, Richard R., Merrill, Paul F., and Wood, Bud. How to Prepare Better Multiple Choice Test Items: Guidelines for University Faculty, 1991.

- Cheung, Derek and Bucat, Robert. How can we construct good multiple-choice items? Presented at the Science and Technology Education Conference, Hong Kong, June 20-21, 2002.

- Haladyna, Thomas M. Developing and validating multiple-choice test items, 2nd edition. Lawrence Erlbaum Associates, 1999.

- Haladyna, Thomas M. and Downing, S. M. Validity of a taxonomy of multiple-choice item-writing rules. Applied Measurement in Education, 2(1), 51-78, 1989.

- Morrison, Susan and Free, Kathleen. Writing multiple-choice test items that promote and measure critical thinking. Journal of Nursing Education 40: 17-24, 2001.

This teaching guide is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

Source: https://cft.vanderbilt.edu/guides-sub-pages/writing-good-multiple-choice-test-questions/

Posted by: brittainverea1994.blogspot.com
